After almost two decades in the Market Research industry, hearing Quality discussed in headlines, conferences, and client meetings is nothing new to me. In the two years since launching PureSpectrum in the APAC region, Quality has been at the forefront of each and every conversation. What is NEW as 2023 begins, however, is the concerning claim that online supply is systemically poor, rife with fraudulent characters and shady organizations, and flooded with link tampering and bot programs.

The industry governing bodies have since issued a call to action and are coordinating, I am sure, to present clear guidance on the way forward. However, as technology and solution providers, self-governance and standard setting are our responsibility, both as a duty of care to our customers and for our own future-proofing. Recommendations and guidance are always welcome as part of the bigger conversation, but change needs to come from within.

As a quality-focused marketplace, PureSpectrum takes ownership of quality before and after interacting with our technology and team members. To do otherwise ultimately devalues our solution and suggests we are not in control of the platforms and processes we create. Thankfully, and let me be the first to say it if you have not heard it already, these issues are not systemic to the point that global reform is required, at least from our perspective. Yes, these problems are very real, but vigilance, preparation, and reaction, plus the employment of the right tools, will present the buyer with a VERY different outlook vs. what the headlines suggest.

To present an alternative view of the ‘state of the industry’, in coordination with Mark Menig, PureSpectrum’s Chief Product Officer, and Sushma Vasudevan, PureSpectrum’s Vice President of Analytics and Data Science, we embarked on a review of more than three years’ worth of our own reconciliation (or reversal) data and more than 10 billion sessions contributing to PureScore™ and Quality ‘blocks’ for the same period. Our goal was to understand, market by market and region by region, what story the data told us so we could present that back to our teams and our customers to provide real confidence moving forward.

At no time was there an expectation that our data would suggest PureSpectrum is perfect. In fact, this is an exercise that we undertake time and time again, in different variations, to learn, re-learn, fail, and fail again in order to move forward and improve. For every advancement in technology or solution, there is an equal and opposing force looking to find loopholes and expose weaknesses, so this cannot be a one-off exercise. This is the nature of the task at hand, one that we willingly accept with our heads held high and sleeves rolled up.

As has always been our nature, PureSpectrum provides a transparent view of our findings (the good and the bad) for all to see. At the top level, there are three stories to share:

- Quality output requires a quality-first mindset.

- The PureScore™ effect since inception – after 10 billion sessions.

- Our reconciliation journey over the past three years.

Quality Output Requires a Quality-First Mindset

Quality represents one of the core pillars of PureSpectrum. Conscious of this from the platform build stage, we have constantly reviewed how supply (new and existing) is vetted and introduced to the marketplace. We have taken the standpoint that there are no ‘good’ or ‘bad’ suppliers, as each is the sum of its panelists, whose quality can vary over their lifetime. Yes, some sources have a higher concentration of low-scoring panelists than others; however, these tend to be isolated cases rather than the norm, which is a combination of both.

PureScore™ was created with this understanding, specifically to serve a multi-sourced landscape. Whilst consolidation has happened at speed at the organisational level of late, supply sources have always been this way. In the early years of online (2002-2004) it was not unheard of to see more than a 60% overlap between panels operating in the same market, and in essence this situation has not changed. What has changed is the ability to de-duplicate, recognise, and monitor at a respondent level (via device, IP, and other identifiers). In its first iteration, PureScore™ introduced, for the first time, behaviour-based machine learning to look at key metrics such as:

- Consistency of key responses

- Response / Conversion rates

- Survey completion time vs. Median expectation

- Open End suitability
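To make these metrics a little more concrete, here is a minimal sketch of how simple behavioural flags could be derived from session data. It is not PureScore™ itself, which is described above as machine-learning based; the `Session` fields, the 0.33 speeder ratio, and the three-word open-end threshold are all assumptions chosen purely for illustration.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Session:
    # Hypothetical session record; field names are illustrative only.
    respondent_id: str
    completion_seconds: float
    consistent_answers: bool  # did key 'stable' answers match earlier ones?
    open_end: str


def behaviour_flags(sessions, speeder_ratio=0.33, min_open_end_words=3):
    """Return simple per-respondent flags for one survey's sessions.

    Assumes each respondent appears at most once in `sessions`.
    """
    # Median completion time across all sessions is the benchmark.
    med = median(s.completion_seconds for s in sessions)
    flags = {}
    for s in sessions:
        flags[s.respondent_id] = {
            # Completed far faster than the median expectation.
            "speeder": s.completion_seconds < speeder_ratio * med,
            # Key responses did not match earlier answers.
            "inconsistent": not s.consistent_answers,
            # Open-ended answer too short to be meaningful.
            "weak_open_end": len(s.open_end.split()) < min_open_end_words,
        }
    return flags


if __name__ == "__main__":
    demo = [
        Session("r1", 600, True, "I mostly buy it for the price"),
        Session("r2", 580, True, "Good value and easy to find"),
        Session("r3", 620, False, "It is fine I guess"),
        Session("r4", 100, True, "asdf"),
    ]
    for rid, f in behaviour_flags(demo).items():
        print(rid, f)
```

In practice, flags like these would be inputs to a score that is updated over many sessions rather than a verdict on a single survey.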

At the first point of contact between a newly introduced respondent and our Marketplace, an initial screener asking key ‘stable’ benchmarking questions is presented. Based on these responses a scoring process can commence which, like supply in general, is constantly evolving. After several iterations of our PureScore™ screener and client surveys, the accuracy of a respondent’s PureScore™ improves and over time it becomes a very reliable indicator. Given that there can be significant challenges when large numbers of new respondents (i.e. a new supply source) are introduced, we maintain overarching control levers to optimize but also minimize impact when doing so. In short, everything needs to be, and is, controlled, but it can never be ‘perfect’ as it is constantly evolving. This is also why a constant feedback loop is required with our customers. Some of this is automated through the survey itself (e.g. red herring, trap, and consistency questions) and some comes verbatim through customer discussion and review.

The end result of PureScore™ is the ability to measure quality in its constantly fluid state. Given this nature, however, it is important that we look at the big picture rather than just at the project level, which will only ever give a snapshot of the situation.

The PureScore™ Effect: What Have We Learned After 10 Billion Contributing Sessions?

Three years on from the introduction of PureScore™ we have an outstanding amount of data that helps us and our customers move forward. It is truly difficult to fathom 10 billion data points of anything but that is the fortunate position we are in.

Note that this data is representative of what happens before a respondent enters a customer survey and is a direct result of automated feedback. This is part of the process that our customers do not see or feel in their day-to-day experience so this may be surprising to some.

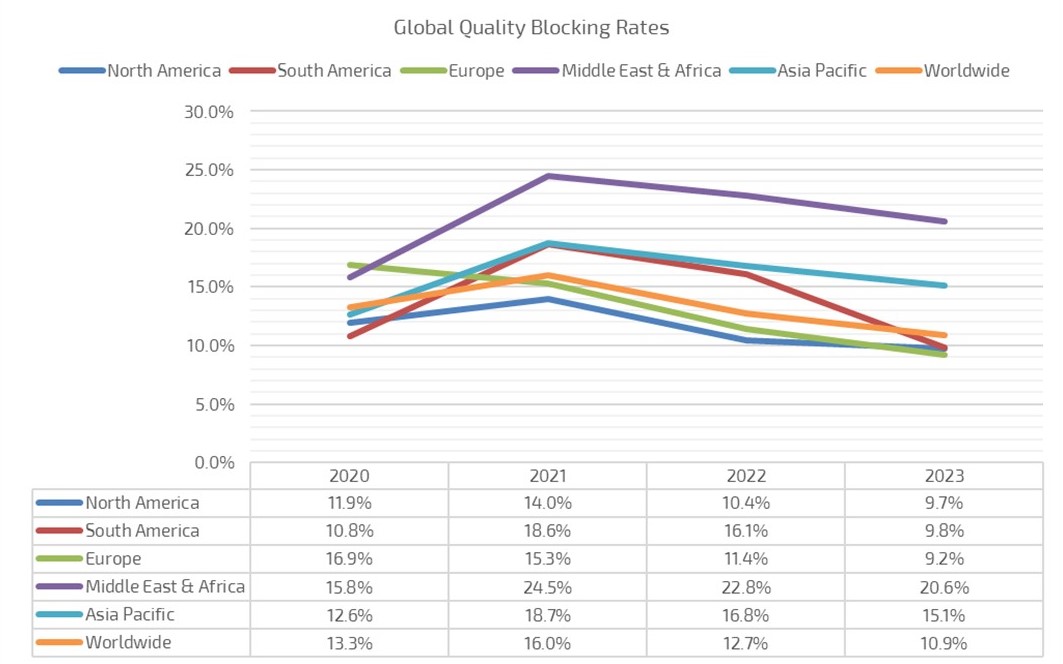

What we can derive quite clearly is that after the initial ‘shock’ of PureScore™ we start to see the downward trend of quality ‘blocking’. There are a multitude of reasons here including:

- Lack of unique supply (regardless of supply source)

- The ability to remove those that have failed previously (suppliers no longer send to these)

- An aggressive supplier vetting and onboarding process

Whilst there is a worldwide downward trend from 2021, this is not to say that all markets show it. In fact, 25% of our markets show a slight uptick in blocking for 2022-23. A significant proportion of these can be attributed to lower volumes of transactions or not enough diverse supply in specific markets, but most are an accurate reflection of challenges faced from either respondent behaviour or survey bots/farms in markets such as India, Australia, the UK, Switzerland, Thailand, Vietnam, and Egypt, to name a few. These are all key markets with high demand but also highly contested supply. It isn’t a surprise that these situations occur given the competition and the creative solutions employed to ‘hack’ the system. However, what is obvious is that we are catching and limiting more and more of these in real-time before they become problematic for our customers.

It should be noted that the five regions stated are at different stages of the journey and differ in types of supply source, internet / mobile penetration, online adoption, etc., so in reality a straight comparison cannot be made. But this certainly paints an interesting picture for those that do not have PureScore™ or similar quality measures in place already. The challenge proves to be very real if this is not happening.

As we are seeing an overall downward trend of ‘blocking’ what impact does that have on reconciliations/reversals across the same period?

Our Reconciliation Journey from 2020 to 2023

Definition: Reconciliation, for this purpose, is defined as a completed survey response that is deemed by our clients to be unacceptable and therefore NOT part of the final dataset. This could be for many reasons including poor OEs, illogical answers, speeders or others when considered against norm data.
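As a small worked example of this definition, the sketch below computes a reconciliation rate as the share of completed responses that a client rejected, and the change between two periods in percentage points. The function names and the figures in the usage example are hypothetical and are not drawn from PureSpectrum’s data.

```python
def reconciliation_rate(completes: int, reconciled: int) -> float:
    """Percentage of completed responses that were reconciled (rejected)."""
    if completes <= 0:
        raise ValueError("completes must be positive")
    if not 0 <= reconciled <= completes:
        raise ValueError("reconciled must be between 0 and completes")
    return 100.0 * reconciled / completes


def point_change(rate_before: float, rate_after: float) -> float:
    """Change between two rates, expressed in percentage points."""
    return rate_after - rate_before


if __name__ == "__main__":
    # Illustrative figures only.
    earlier = reconciliation_rate(completes=2000, reconciled=50)
    later = reconciliation_rate(completes=4000, reconciled=120)
    print(f"earlier: {earlier:.1f}%  later: {later:.1f}%  "
          f"change: {point_change(earlier, later):+.1f} points")
```

Expressing the change in percentage points rather than as a relative percentage keeps comparisons between markets with very different base rates easier to read.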

It is important to note that PureSpectrum does not ‘limit’ the number of reconciliations our customers can do. In fact, for the majority of our clients who engage via our self-serve Marketplace Platform, this is a completely self-owned process. Internally we have a benchmark of expectations, and for anything over and above that we work with our customers to investigate, understand, and then provide feedback to our suppliers. Reconciliations, along with countless other survey exit ‘statuses’, contribute directly back into a respondent’s PureScore™ and the cycle continues.

Whilst we are not able to comment on what other marketplaces and suppliers are facing when it comes to reconciliations, our perspective is that there is no reason to raise the alarm and state that supply is broken! In fact, taking into consideration PureSpectrum’s growth over the same period and the addition of new markets and suppliers, the picture is quite the opposite.

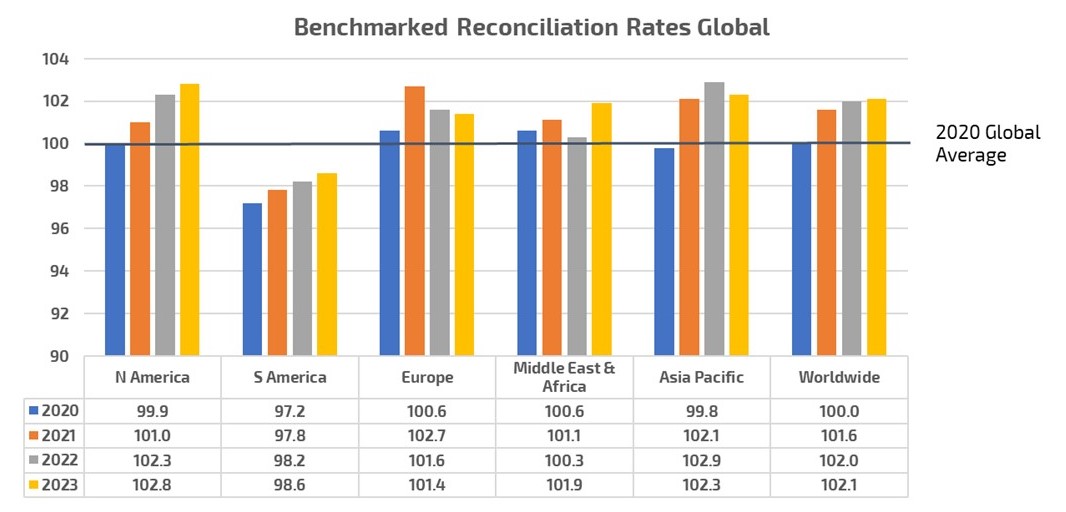

Taking 2020 as the starting point (PureScore™ was launched in 2019):

- Since 2020 we have seen global reconciliation rates increase by 2.1%

- In the last 14 months, we have seen a 0.1% increase

In the last 3+ years, 25% of our marketplace markets have seen an increase in their quality blocking, and in the same period 65% of markets have seen their reconciliations increase. However, this still only accounts for 2.1% overall. This doesn’t sound like dire straits to me. But without the appropriate tools, quality blocking plus reconciliations would lead to very uncomfortable conversations with clients.

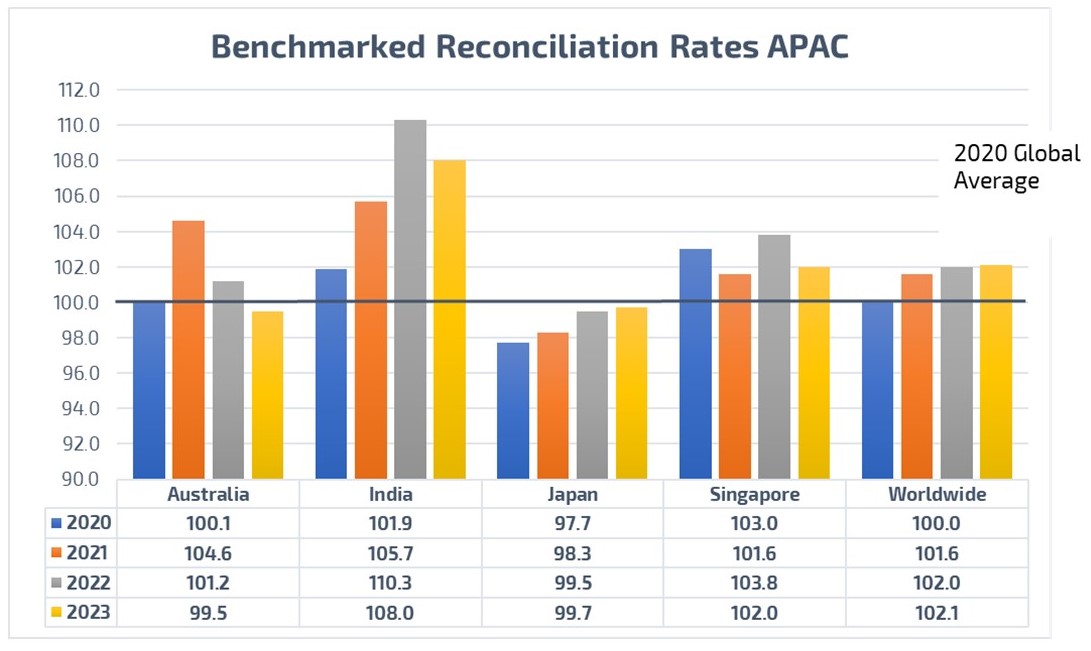

Specifically diving into some key APAC markets:

In APAC, Australia and India are two of the most highly contested and scrutinized markets when it comes to quality. Despite an increase in challenges in these markets over the last 14 months, the reconciliation rates y-o-y are down for both.

Australia has seen its quality blocking increase slightly (by 0.6%) over the past 14 months; however, reconciliations have decreased year after year. Having just returned from a week in Sydney with suppliers and clients, I can say this is far from the 25-70% range clients are talking about with some of their incumbent providers (yes, that range again was 25-70%). Australia is a somewhat closed ecosystem when it comes to supply (and the overlap of it across providers), so this is not a surprise for us, but it may well raise some eyebrows for others.

For India, these numbers are ultimately less attractive, but this is the reality of the market. This market is challenged by an abundance of new suppliers and is targeted by bot and survey farms to a greater extent than others. Blocking rates here have increased 10 percentage points since 2020 and, as illustrated here, so did the reconciliation rates until 2023.

Being transparent about this is key, and there needs to be an understanding between buyer and supplier in these instances. Wherever possible, quality-type questions (e.g. trap, red herring, honey pot) will be placed within the survey itself, and fails will automatically be termed quality fails. Having a proportion of bad respondents is to be expected, but not utilising the right tools to catch them before the end of fieldwork is not good practice.
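A minimal sketch of what an automated in-survey quality check could look like is shown below. The question IDs, expected answers, and status labels are hypothetical and are not taken from any real survey or from PureSpectrum’s platform; the point is only that a failed check can be mapped to a quality-fail status during fieldwork rather than discovered afterwards.

```python
# Hypothetical question IDs and expected answers, for illustration only.
TRAP_CHECKS = {
    "q_trap_select_blue": "blue",   # instructed-response (trap) item
    "q_red_herring_brand": "none",  # fictitious brand nobody can have used
}


def failed_checks(answers: dict[str, str], checks: dict[str, str]) -> list[str]:
    """Return the IDs of quality checks the respondent failed.

    A missing answer counts as a fail.
    """
    return [q for q, expected in checks.items() if answers.get(q) != expected]


def exit_status(answers: dict[str, str], checks: dict[str, str] = TRAP_CHECKS) -> str:
    """Map a respondent's answers to a survey exit status."""
    return "quality_fail" if failed_checks(answers, checks) else "complete"


if __name__ == "__main__":
    good = {"q_trap_select_blue": "blue", "q_red_herring_brand": "none"}
    bad = {"q_trap_select_blue": "green", "q_red_herring_brand": "none"}
    print(exit_status(good))
    print(exit_status(bad), failed_checks(bad, TRAP_CHECKS))
```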

In addition, it should be noted that ‘proprietary’ and ‘market research only’ panels no longer exist outside of a few isolated examples. Instead, we predominantly act as facilitators for supply in an increasingly cost-pressured environment. To an extent, if the market continues to require a $1 cost per interview, then the resulting quality will match that price. Let’s not sugarcoat the reality when the ‘cheapest’ solution wins out.

Summary

In alignment with the industry consensus, quality is a major issue and one that needs round-the-clock attention. Based on the data we have collated, yes, reconciliations/reversals are up but for PureSpectrum this represents 2.1%. This rate will not impact our overall performance.

As an industry we need to be more informed and transparent, and that applies to research buyers as well as suppliers. We all need to understand our roles from survey design to data delivery, but as individual organisations we need to take more ownership of what we CAN control.

PureSpectrum began its journey three years ago, with our quality-first initiative in place from the day of inception. Quality has never been a non-issue, but pushing responsibility to others is not the solution. If you have not done so already, now is the time to look inwards for accountability and resolution.

Is online supply really on the decline? In short, no. At least no more so than it has been in the past few years. Yes, the landscape has been more challenging, but this has largely been counteracted by advancements in technology. PureSpectrum is proud to share what we have achieved and will happily continue to do so. Quality is not and cannot be a ‘dirty little secret’. There is no reason to deny that it affects us all, but it is clear that it affects some more than others.

About PureSpectrum

PureSpectrum offers a complete end-to-end market research and insights platform, helping insights professionals make decisions more efficiently and faster than ever before. Awarded MR Supplier of the Year at the 2021 Marketing Research and Insight Excellence Awards, PureSpectrum is recognized for its industry-leading data quality. PureSpectrum developed the respondent-level scoring system, PureScore™, and believes its continued success stems from its talent density and dedication to simplicity and quality. In the few years since its inception, PureSpectrum has been named one of the Fastest Growing Companies in North America on Deloitte’s Fast 500 since 2020, twice placed in the Top 50 of the GRIT Most Innovative List, and ranked twice on the Inc. 5000 list.